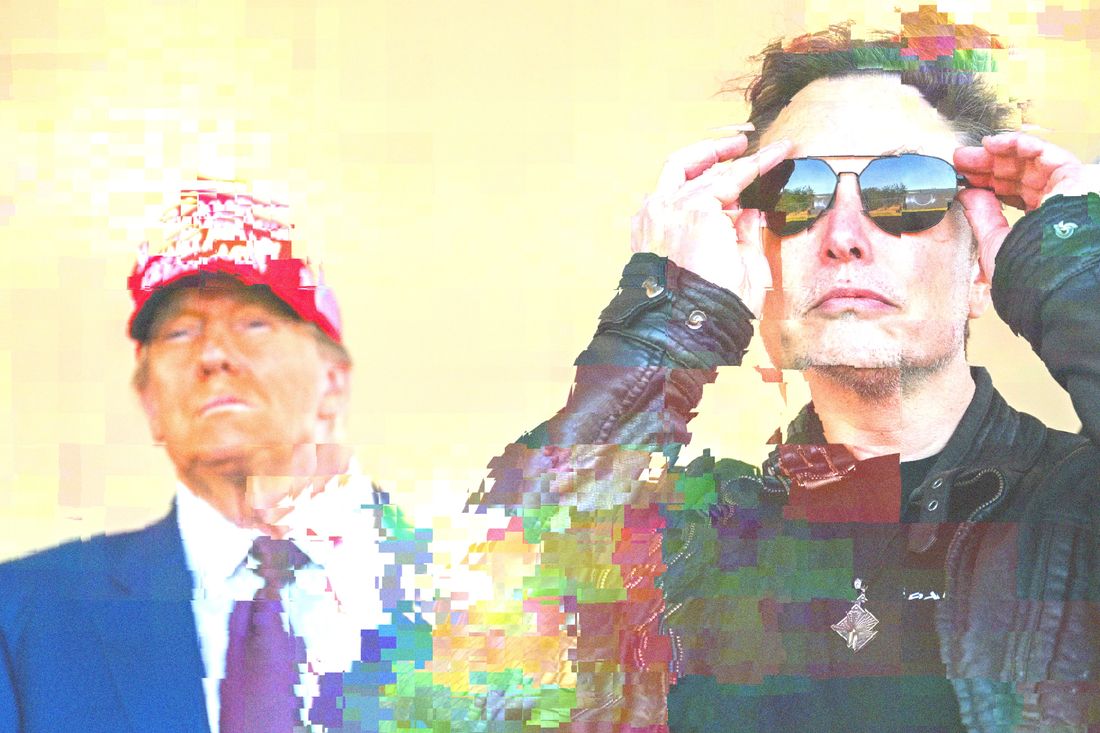

Photo-Illustration: Intelligencer; Photo: Getty Images

After buying Twitter, Elon Musk rebuilt it to his own specifications and preferences. This resulted in an environment we may gently call friendlier to the discussion and promotion of right-wing politics. The goal motivated his purchase and subsequent management and product decisions, and people who still use the platform will agree, in a narrow sense, that its character has changed. From the left: Elon Musk turned Twitter into 4chan for government officials and tech workers. From the right: Elon Musk killed Twitter, created X, and saved civilization.

This obvious and very public transformation has remained a subject of dispute for a few reasons. For one, it has been a gradual process, a slow accumulation of user migrations, changes to the platform’s policies and features, and the evolution of new dominant communities alongside older declining ones. This makes its progress hard to track. For another, as an environment for intra-elite communication, signaling, and coordination, it has been pretty resilient (at the very least, a lot of powerful people still produce newsworthy material there), meaning a lot of people who are unsympathetic to or uninterested in its new direction have nonetheless found reasons to stick around. Everyone with exposure to X knows it has changed, but it’s harder to say how much and with what results.

At The Argument, Lakshya Jain brought some data to the discussion, conducting a large national survey segmented by respondents’ preferred online news sources. The results were pretty stark:

> As an example, even though ICE’s net favorability rating is at -26 percentage points with all voters, it’s almost break-even with people who get their news from Twitter. Compare that to other social media platforms like Reddit and TikTok, where over 70% of voters viewed the agency unfavorably.

In the survey, conducted in January, X users were the only group in which a majority, just barely over 50 percent, expressed “strong” or “somewhat” approval of Donald Trump. His approval was significantly lower among consumers of news from “podcasts and YouTube,” local television, and even Facebook. Among people reading “newspapers or news websites,” browsing Reddit, watching broadcast television, or scrolling TikTok or Instagram to keep up with current events, the numbers were, as Jain described them, “catastrophic.” He noted, “If you’re largely getting your news from Twitter, you might not even know that Trump is unpopular, because you wouldn’t even see a lot of the backlash.”

Last week, in a study published in Nature, a group of researchers attempted to answer a sensible follow-up question: So what? People organize around news sources that flatter their beliefs, and in a fragmented news environment, you would expect different attitudes to be associated with venues that have developed a clear partisan identity. Well, it turns out that the engine of Musk’s X — its algorithmic “For You” page — is an ideological ratchet:

> In addition to promoting entertainment, X’s feed algorithm tends to push more conservative content to users’ feeds. Seven weeks of exposure to such content in 2023 shifted users’ political opinions in a more conservative direction, particularly with regard to policy priorities, perceptions of the criminal investigations into Trump and views on the war in Ukraine. The effect is asymmetric: switching the algorithm on influenced political views, but switching it off did not reverse users’ perspectives on policy priorities or current political issues.

The effect was surprisingly pronounced considering the comparatively less insane conditions on the platform, and across politics in general, in 2023. In the space of a couple of months, users consuming X’s algorithmic feeds were both “4.7 percentage points more likely to prioritize policy issues considered important by Republicans” and “5.2 percentage points less likely to reduce their X usage.” Taken together, these analyses offer a bit of data to support the notion that X has become a place that both attracts more conservatives and pushes them further to the right, resulting in an X-obsessed administration that often uses the bizarre language of Zoomer fascists when posting online.

They also support the argument that increasingly algorithmic platforms — on which feeds centered around user-to-user connections have been either crowded out or replaced by feeds that serve posts based on prediction and user feedback — are a force for ideological persuasion. This is intuitive if you imagine algorithmic recommendations as automated editorial processes or perhaps like targeted ad networks. They’re going to end up promoting something or being manipulated to that end. Fears of algorithmic persuasion are widely held and have been consequential. After the 2016 election, Mark Zuckerberg was forced to confront the question, posed by many in the media, of whether Facebook might have swayed the outcome. More recently, lawmakers’ fear that TikTok’s algorithm was promoting Chinese propaganda, or vilifying Israel, helped prompt a legal ban and forced sale.

In the past, though, the concern about algorithmic persuasion — What is Facebook doing to the nation’s elderly voters? Is TikTok radicalizing the kids against capitalism? — has often been an elite obsession, in which people who think of themselves as unusually informed and savvy worry about the manipulation of the masses by machines dumber than they are but smarter than everyone else. This was a particularly popular theory on Twitter itself, which, more than any other platform, was built to feel like a simulation of the public discourse and attracted people who felt entitled to be a part of it. The Muskification of Twitter into X — the MAGA platform of choice, where Musk’s tweets and the platform’s recommendations are unavoidable and the house chatbot is an outspoken rightist — may also be influencing the elites who still use it. Could it be happening to you, too? Are you … sure?