Meta’s updating its AI content labels to ensure that a broader range of synthetic content is tagged, in response to a growing flood of generative AI posts in its apps.

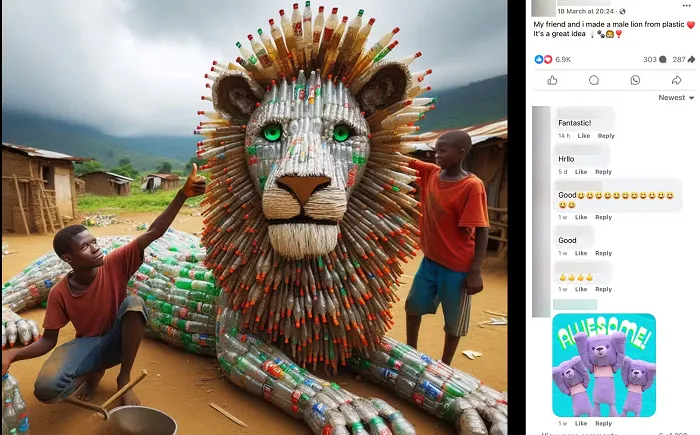

Over the past few months, more and more AI-generated engagement bait has been showing up on Facebook, and much of it has been generating a heap of engagement.

Even the slightest scrutiny would reveal that this is not a real image. But Facebook is used by billions of people, and not all of them are going to zoom in, or have the digital literacy to be aware that generative AI can be used in this way (as evidenced by the 6.9k Likes on this post).

As such, Meta’s updating its AI labeling policies to ensure that more AI-generated content is tagged and disclosed accordingly.

As per Meta:

“Our existing approach is too narrow, since it only covers videos that are created or altered by AI to make a person appear to say something they didn’t say. Our manipulated media policy was written in 2020 when realistic AI-generated content was rare and the overarching concern was about videos. In the last four years, and particularly in the last year, people have developed other kinds of realistic AI-generated content like audio and photos, and this technology is quickly evolving.”

The new process will see more “Made with AI” labels being appended to content when Meta detects “industry standard AI image indicators or when people disclose that they’re uploading AI-generated content”.

Meta says that it will also leave more AI-generated content up in its apps, rather than removing it, with the labels serving both an informational and an educational purpose.

“If we determine that digitally-created or altered images, video or audio create a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label so people have more information and context. This overall approach gives people more information about the content so they can better assess it and so they will have context if they see the same content elsewhere.”

In other words, the labels will immediately inform users that the content was created with AI, while also showing what can now be done with the technology, which should help to raise awareness of its capabilities.

It’s a good update, though a lot here depends on Meta’s ability to detect generative AI within posts.

As noted, in the above example (and the many others like it), it’s obvious to most people that the image was AI-generated. But as AI systems improve, and people learn new ways to use them, such images will become harder to spot, which could limit Meta’s capacity to detect them automatically.

Then again, the new approach will also give Meta’s moderators more enforcement powers over such content, and it could serve an important purpose in raising awareness of what can be done with AI fakes.

Time will tell, but it does seem like a good update, and one that could have a real impact as AI use continues to expand.

Meta says that it will implement its new AI labeling process in May.